Precision Can Be Calculated From Confusion Matrix Using Which Formula

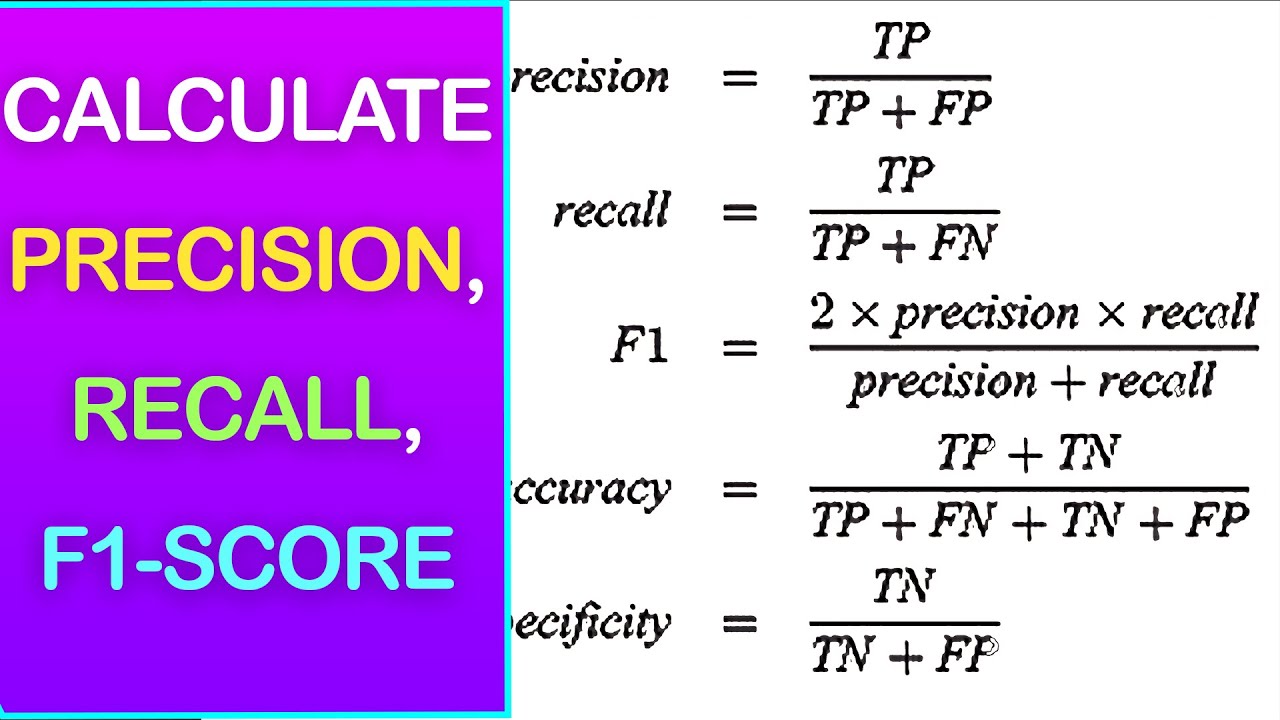

However the use of a confusion matrix goes way beyond just these four metrics. Other basic measures from the confusion matrix.


Formula for calculating precision: substitute the values of these terms and do the simple math; the result would be 0.636.

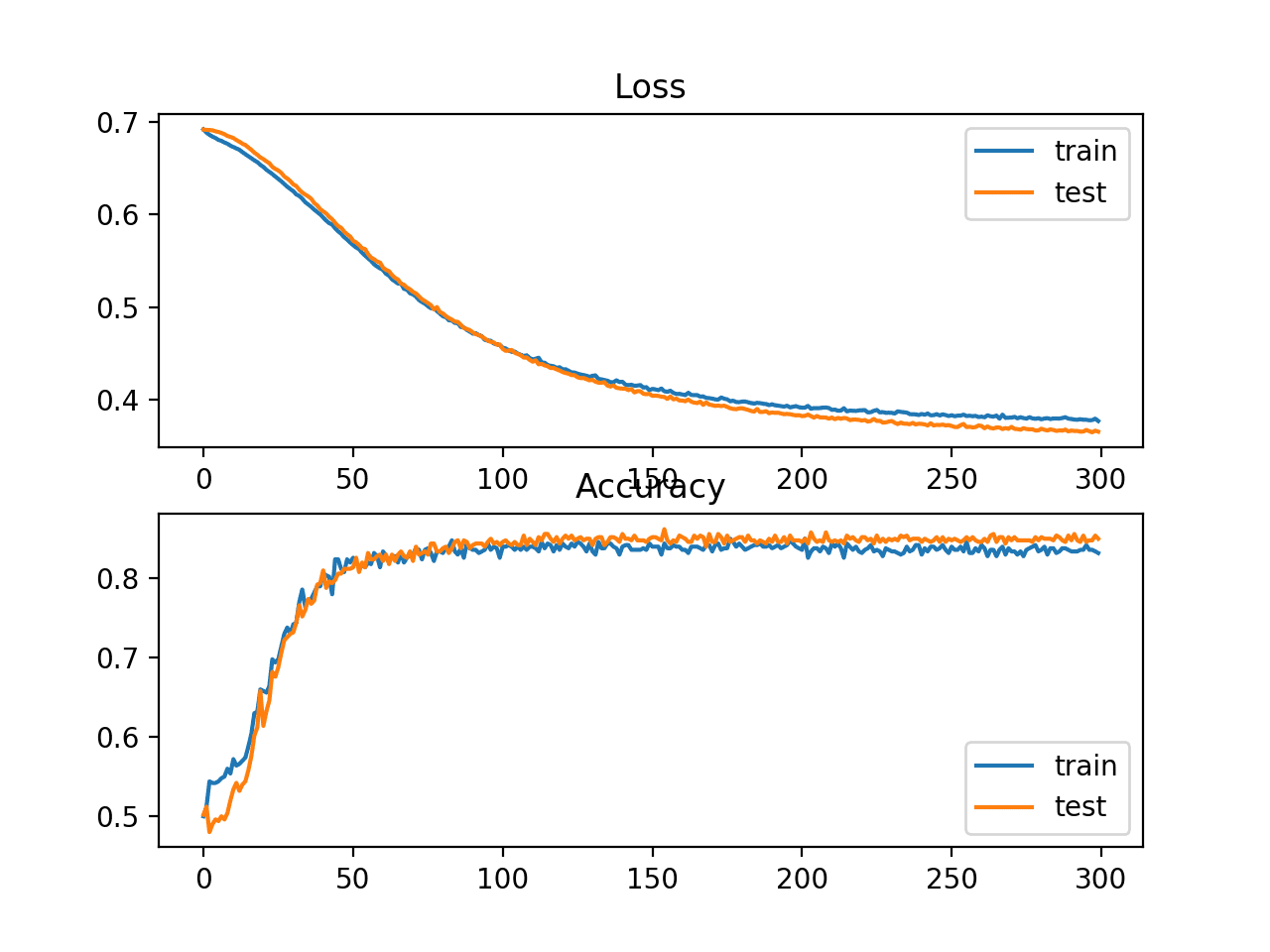

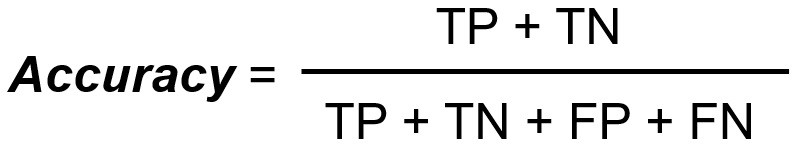

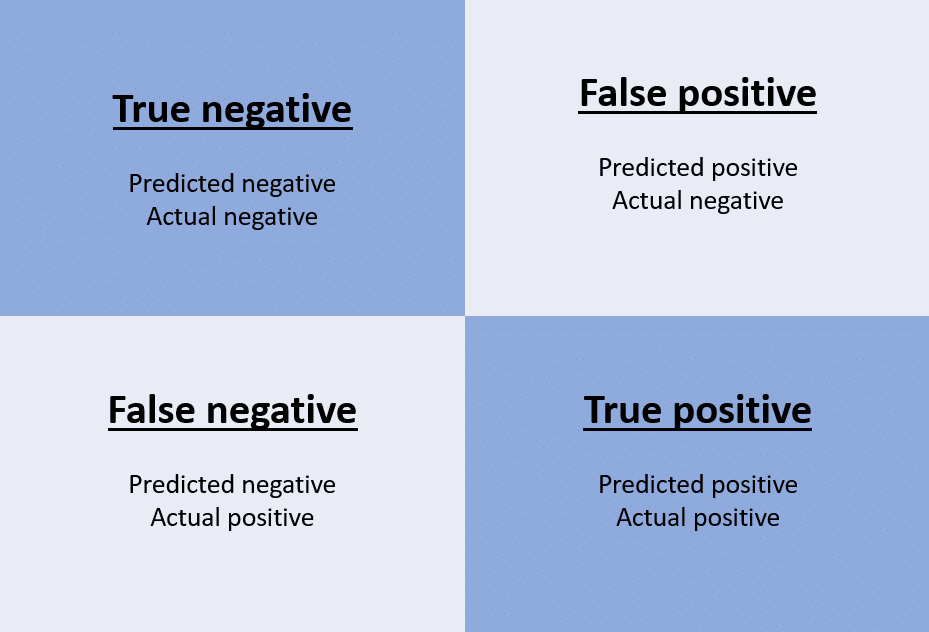

$\text{Accuracy} = \frac{TN + TP}{TN + FP + FN + TP}$. Accuracy can be misleading if used with imbalanced datasets, and therefore there are other metrics based on the confusion matrix which can be useful for evaluating performance. Higher model precision means that most of the Covid-19 positive predictions were actually found to be positive, or truly positive. The total number of positive predictions is the sum of true positives and false positives.

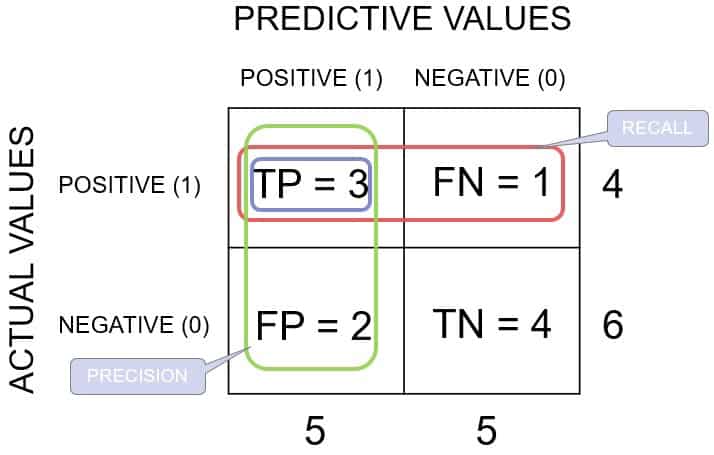

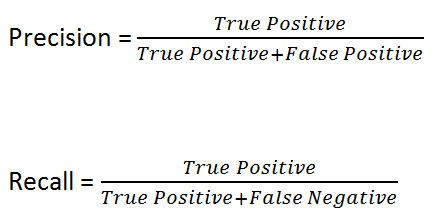

$\text{Precision} = \frac{\text{True Positives}}{\text{Total Positive Predictions}}$. The recall must be as high as possible. Fill in the calculator/tool with your values and/or your answer choices and press Calculate.

Recall tells us: when it is actually yes, how often does our classifier predict yes? Then you can click on the Print button to open a PDF in a separate window with the inputs and results. You can further save the PDF or print it. The following code snippet computes the confusion matrix and then calculates precision and recall.

Or it can also be defined as: out of all the positive classes, how many our classifier predicted correctly. So for this purpose we can use the F-score. It can also be calculated as $1 - \text{ERR}$, where ERR is the error rate.
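
As a rough illustration of the F-score mentioned above, the sketch below computes the F1 variant (the harmonic mean of precision and recall) from raw counts. The function name `f1_score_from_counts` and the example counts are hypothetical and chosen only for illustration.

```python
def f1_score_from_counts(tp: int, fp: int, fn: int) -> float:
    """Return F1, the harmonic mean of precision and recall, from raw counts."""
    # Guard against empty denominators: no positive predictions or no actual positives.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts (not from the article): precision = 0.8, recall = 0.5.
f1 = f1_score_from_counts(tp=8, fp=2, fn=8)
print(round(f1, 3))
```

Because F1 is a harmonic mean, it stays low unless both precision and recall are reasonably high, which is what makes it handy for comparing two models with a single number.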

Precision, as used in document retrieval, may be defined as the number of correct documents returned by our ML model divided by the total number of documents it returned. And similarly Ra, Rb, Rc. AUC is the Area Under the ROC Curve.

```python
from sklearn.metrics import confusion_matrix

gt = [1, 1, 2, 2, 1, 0]  # ground-truth labels
pd = [1, 1, 1, 1, 2, 0]  # predicted labels
cm = confusion_matrix(gt, pd)  # rows = gt, cols = pred

# compute tp, tp_and_fn and tp_and_fp w.r.t. all classes
tp_and_fn = cm.sum(1)
tp_and_fp = cm.sum(0)
tp = cm.diagonal()
```

Based on the above, the formula of precision can be stated as the following. Recall goes another route.

How many patients who tested +ve are actually +ve? On the basis of the above confusion matrix, we can calculate the precision of the model as $\text{Precision} = \frac{100}{100 + 10} = 0.91$. Recall. Example of Confusion Matrix: Calculating Confusion Matrix using sklearn

```python
from sklearn.metrics import confusion_matrix

# labels and predictions are assumed to be existing sequences of 0/1 class labels
confusion = confusion_matrix(labels, predictions)
FN = confusion[1][0]
TN = confusion[0][0]
TP = confusion[1][1]
FP = confusion[0][1]
```

You can also pass a parameter `normalize` to normalize the calculated data.

Precision is the ratio of subjects correctly identified as +ve by the test to all subjects identified as +ve by the test. The formula for calculating the precision of your model. For example, we can use this function to calculate precision for the scenarios in the previous section.

We can easily calculate it from the confusion matrix with the help of the following formula: $\text{Precision} = \frac{TP}{TP + FP}$. For the above-built binary classifier, TP = 73 and TP + FP = 73 + 7 = 80. The accuracy of a model through a confusion matrix is calculated using the formula given below. $\text{Precision} = \frac{TP}{TP + FP}$. Recall.
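
To make the arithmetic concrete, here is a minimal sketch that plugs the counts quoted above (TP = 73, FP = 7) into the precision formula. The helper name `precision_from_counts` is an assumption made for this example.

```python
def precision_from_counts(tp: int, fp: int) -> float:
    """Precision = TP / (TP + FP): the share of positive predictions that are correct."""
    if tp + fp == 0:
        # With no positive predictions the ratio is undefined.
        raise ValueError("precision is undefined when there are no positive predictions")
    return tp / (tp + fp)


# Counts quoted in the text: 73 true positives and 7 false positives.
p = precision_from_counts(tp=73, fp=7)
print(p)  # 0.9125
```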

It can be calculated using the formula below. Create the Confusion Matrix. The confusion matrix, precision, recall, and F1 score give a better intuition of prediction results than accuracy does.

First, the case where there are 100 positive to 10000 negative examples and a model predicts 90 true positives and 30 false positives. Thus the false positive is very. True positive, false negative, false positive, and true negative.
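
The imbalanced scenario above can be worked through in a few lines. This is only a sketch: the false negative and true negative counts are derived from the stated totals, on the assumption that every example falls into exactly one of the four cells.

```python
# Totals and counts quoted in the text.
positives, negatives = 100, 10_000
tp, fp = 90, 30

# Derived cells (assumption: each example lands in exactly one cell).
fn = positives - tp   # actual positives the model missed
tn = negatives - fp   # actual negatives the model got right

precision = tp / (tp + fp)
recall = tp / (tp + fn)
accuracy = (tp + tn) / (positives + negatives)

print(f"precision={precision:.3f} recall={recall:.3f} accuracy={accuracy:.3f}")
```

Accuracy comes out above 0.99 here even though one in four positive predictions is wrong, which illustrates why accuracy alone says little on imbalanced data.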

This metric is often used in cases where classification of true positives is a priority. It is defined as: out of the total positive classes, how many our model predicted correctly. We know $\text{Precision} = \frac{TP}{TP + FP}$, so for Pa the true positive will be actual A predicted as A, i.e. 10; the other two cells in that column, whether B or C, make up the false positives.

The precision score can be calculated using the `precision_score` scikit-learn function. You can see a confusion matrix as a way of measuring the performance of a classification machine learning model. It summarizes the results of a classification problem using four metrics. Hence Precision = 73/80 = 0.9125.
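
A minimal sketch of the scikit-learn route, assuming scikit-learn is installed. The label lists are made up for illustration; the point is that `precision_score` agrees with the value computed by hand from the confusion matrix.

```python
from sklearn.metrics import confusion_matrix, precision_score

# Hypothetical binary labels, invented for this example.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]

# For binary labels, ravel() yields the cells in the order tn, fp, fn, tp.
tn, fp, fn, tp = confusion_matrix(y_true, y_pred).ravel()

manual = tp / (tp + fp)                    # precision by hand
library = precision_score(y_true, y_pred)  # precision from scikit-learn

print(manual, library)
```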

Error costs of positives and negatives are usually different. Accuracy as a performance metric can be deceptive when dealing with imbalanced data. In this blog we will learn about the confusion matrix and its associated terms, which look confusing but are trivial.

Next, we'll use the COUNTIFS formula to count the number of values that are 0 in the Actual column and also 0 in the Predicted column. Accuracy is calculated as the total number of correct predictions (TP + TN) divided by the total number of examples in the dataset (P + N). The best accuracy is 1.0, whereas the worst is 0.0.
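
The same cell-by-cell counting can be sketched in Python as a rough analogue of the spreadsheet approach. The `actual` and `predicted` columns and the helper `count_pairs` are hypothetical, introduced only for this example.

```python
# Hypothetical Actual and Predicted columns (0 = negative, 1 = positive).
actual = [0, 0, 1, 1, 0, 1, 0, 1, 1, 0]
predicted = [0, 1, 1, 0, 0, 1, 0, 1, 1, 0]


def count_pairs(actual, predicted, a, p):
    """Count rows whose Actual value is `a` and whose Predicted value is `p`."""
    return sum(1 for x, y in zip(actual, predicted) if x == a and y == p)


tn = count_pairs(actual, predicted, 0, 0)  # actual 0, predicted 0
fp = count_pairs(actual, predicted, 0, 1)  # actual 0, predicted 1
fn = count_pairs(actual, predicted, 1, 0)  # actual 1, predicted 0
tp = count_pairs(actual, predicted, 1, 1)  # actual 1, predicted 1

# Accuracy = correct predictions / all examples.
accuracy = (tp + tn) / len(actual)
print(tn, fp, fn, tp, accuracy)
```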

The more FPs that get into the mix, the uglier that precision is going to look. If there are no bad positives (those FPs), then the model has 100% precision. We'll use a similar formula to fill in every other cell in the confusion matrix.

Pa = 10/18 ≈ 0.56, Ra = 10/17 ≈ 0.59. Steps on how to print your input results. 1) First make a plot of the ROC curve by using the confusion matrix.

2) Normalize the data so that the X and Y axes are in unity. $\text{Precision} = \frac{TP}{TP + FP}$. Precision answers the question. Using these four metrics the confusion matrix.

Calculate Accuracy, Precision, and Recall. Now we calculate three values each for precision and recall, and call them Pa, Pb, and Pc. If two models have low precision and high recall, or vice versa, it is difficult to compare these models.
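
The per-class calculation can be sketched as follows. The 3x3 matrix is hypothetical: only class A's numbers (10 correct, 18 predicted as A, 17 actually A) are taken from the text, and the remaining cells are invented to fill out the example. Rows are assumed to be actual classes and columns predicted classes.

```python
labels = ["A", "B", "C"]

# Hypothetical confusion matrix: rows = actual class, columns = predicted class.
cm = [
    [10, 4, 3],   # actual A (row sum 17)
    [5, 12, 2],   # actual B
    [3, 1, 15],   # actual C
]

precision = {}
recall = {}
for i, label in enumerate(labels):
    tp = cm[i][i]                                # diagonal cell: correctly predicted
    predicted_total = sum(row[i] for row in cm)  # column sum = TP + FP
    actual_total = sum(cm[i])                    # row sum = TP + FN
    precision[label] = tp / predicted_total
    recall[label] = tp / actual_total

for label in labels:
    print(label, round(precision[label], 2), round(recall[label], 2))
```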

To calculate a model's precision, we need the positive and negative numbers from the confusion matrix.
